Compiler Design Lab work | 5th Sem | CSE

Date: 06/08/2024

EXPERIMENT NO. – 1

AIM: Write a C++ program to count the number of different types of tokens in a given program to simulate Tokenisation in Lexical phase of Compiler Design.

THEORY:

Tokenization in the lexical phase of compiler design is the process of breaking down the source code into fundamental units called tokens. These tokens represent the smallest elements of the code with meaningful semantics, such as keywords, identifiers, literals, operators, and special symbols. During this phase, the lexer (or lexical analyzer) scans the input source code sequentially, identifies these tokens based on predefined patterns, and categorizes them accordingly. This step is crucial as it transforms raw code into a structured format that can be further analyzed and processed by the subsequent phases of the compiler, such as syntax analysis and semantic analysis.
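As a small illustration of the idea described above, the following sketch splits one statement into lexemes and assigns each a category. It is only a minimal sketch: the helper names `classify` and `scan` are hypothetical, the keyword and symbol lists are deliberately tiny, and every operator is assumed to be a single character.

```cpp
#include <cctype>
#include <string>
#include <utility>
#include <vector>

// Hypothetical helper: map one lexeme to a coarse token category.
// The keyword and symbol lists are illustrative, not complete.
std::string classify(const std::string &lexeme) {
    unsigned char first = static_cast<unsigned char>(lexeme[0]);
    if (lexeme == "int" || lexeme == "if" || lexeme == "return") return "keyword";
    if (std::isdigit(first)) return "literal";
    if (std::isalpha(first) || lexeme[0] == '_') return "identifier";
    if (lexeme == ";" || lexeme == "(" || lexeme == ")") return "special symbol";
    return "operator";
}

// Hypothetical helper: scan the input left to right and return
// (lexeme, category) pairs. Whitespace only separates tokens.
std::vector<std::pair<std::string, std::string>> scan(const std::string &src) {
    std::vector<std::pair<std::string, std::string>> out;
    std::size_t i = 0;
    while (i < src.size()) {
        unsigned char ch = static_cast<unsigned char>(src[i]);
        if (std::isspace(ch)) { ++i; continue; }
        std::size_t start = i;
        if (std::isalnum(ch) || ch == '_') {
            // Consume a whole word or number.
            while (i < src.size() &&
                   (std::isalnum(static_cast<unsigned char>(src[i])) || src[i] == '_')) {
                ++i;
            }
        } else {
            ++i; // assumption: operators and symbols are one character long
        }
        std::string lexeme = src.substr(start, i - start);
        out.emplace_back(lexeme, classify(lexeme));
    }
    return out;
}
```

Calling `scan("int x = 10;")` yields five pairs: `int` as a keyword, `x` as an identifier, `=` as an operator, `10` as a literal, and `;` as a special symbol.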

CODE:

#include <iostream>
#include <sstream>
#include <string>
#include <unordered_set>
#include <vector>
#include <cctype>
#include <algorithm>

using namespace std;

// Returns true if the token is one of the recognised C++ keywords.
bool isKeyword(const string &token)
{
    static const vector<string> keywords = {
        "int", "float", "double", "char", "void",
        "return", "if", "else", "for",
        "while", "do", "switch", "case",
        "default", "break", "continue",
        "class", "public", "private",
        "protected", "static", "const",
        "typedef", "namespace", "using",
        "template", "try", "catch", "throw",
        "virtual"};
    return find(keywords.begin(), keywords.end(), token) != keywords.end();
}

// Returns true if the token is one of the recognised operators.
bool isOperator(const string &token)
{
    static const vector<string> operators = {
        "+", "-", "*", "/", "%", "++",
        "--", "==", "!=", "<",
        ">", "<=", ">=",
        "&&", "||", "!", "=", "+=", "-=",
        "*=", "/=", "%=", ">>", "<<",
        "&", "|", "^", "~"};
    return find(operators.begin(), operators.end(), token) != operators.end();
}

// Returns true if the token is a punctuation or grouping symbol.
bool isSpecialSymbol(const string &token)
{
    static const vector<string> specialSymbols = {
        "{", "}", "(", ")", ";", ",",
        "[", "]", "->", "::"};
    return find(specialSymbols.begin(), specialSymbols.end(), token) != specialSymbols.end();
}

int main()
{
    // Each set stores the distinct tokens of one category.
    unordered_set<string> identifiers;
    unordered_set<string> operators;
    unordered_set<string> specialSymbols;
    unordered_set<string> keywords;
    unordered_set<string> literals;
    stringstream ss;

    cout << "Enter the C++ program (end input with two consecutive Enter presses):" << endl;

    // Read lines until two consecutive empty lines are entered.
    string line;
    bool prevLineEmpty = false;
    while (getline(cin, line))
    {
        if (line.empty())
        {
            if (prevLineEmpty)
            {
                break;
            }
            prevLineEmpty = true;
        }
        else
        {
            prevLineEmpty = false;
            ss << line << '\n';
        }
    }

    // Splits one line of source into raw tokens. String literals are kept whole
    // (escaped quotes are not handled), whitespace only separates tokens, and
    // punctuation or operator characters become tokens of their own.
    auto tokenize = [](const string &str)
    {
        const string delimiters = ";,{}()[].-+*/%=!<>&|^~";
        vector<string> tokens;
        string token;
        bool inString = false;
        for (size_t i = 0; i < str.size(); ++i)
        {
            char ch = str[i];
            if (inString)
            {
                // Inside a string literal every character belongs to the literal.
                token += ch;
                if (ch == '"')
                {
                    tokens.push_back(token);
                    token.clear();
                    inString = false; // the closing quote ends the literal
                }
            }
            else if (ch == '"')
            {
                if (!token.empty())
                {
                    tokens.push_back(token);
                    token.clear();
                }
                inString = true;
                token += ch;
            }
            else if (isspace(static_cast<unsigned char>(ch)) || delimiters.find(ch) != string::npos)
            {
                if (!token.empty())
                {
                    tokens.push_back(token);
                    token.clear();
                }
                if (!isspace(static_cast<unsigned char>(ch)))
                {
                    // Prefer two-character tokens such as "==", "++" or "->".
                    string two = str.substr(i, 2);
                    if (two.size() == 2 && (isOperator(two) || isSpecialSymbol(two)))
                    {
                        tokens.push_back(two);
                        ++i;
                    }
                    else
                    {
                        tokens.push_back(string(1, ch));
                    }
                }
            }
            else
            {
                token += ch;
            }
        }
        if (!token.empty())
        {
            tokens.push_back(token);
        }
        return tokens;
    };

    // Classify every token of every input line into one of the five categories.
    stringstream inputStream(ss.str());
    string lineContent;
    while (getline(inputStream, lineContent))
    {
        vector<string> tokens = tokenize(lineContent);
        for (const string &token : tokens)
        {
            if (isKeyword(token))
            {
                keywords.insert(token);
            }
            else if (isdigit(static_cast<unsigned char>(token[0])) || (token[0] == '"' && token.back() == '"'))
            {
                literals.insert(token);
            }
            else if (isOperator(token))
            {
                operators.insert(token);
            }
            else if (isSpecialSymbol(token))
            {
                specialSymbols.insert(token);
            }
            else
            {
                identifiers.insert(token);
            }
        }
    }

    cout << "Identifiers (" << identifiers.size() << " distinct):" << endl;
    for (const string &id : identifiers)
    {
        cout << id << endl;
    }

    cout << "\nOperators (" << operators.size() << " distinct):" << endl;
    for (const string &op : operators)
    {
        cout << op << endl;
    }

    cout << "\nSpecial Symbols (" << specialSymbols.size() << " distinct):" << endl;
    for (const string &sym : specialSymbols)
    {
        cout << sym << endl;
    }

    cout << "\nKeywords (" << keywords.size() << " distinct):" << endl;
    for (const string &kw : keywords)
    {
        cout << kw << endl;
    }

    cout << "\nLiterals (" << literals.size() << " distinct):" << endl;
    for (const string &lit : literals)
    {
        cout << lit << endl;
    }

    return 0;
}

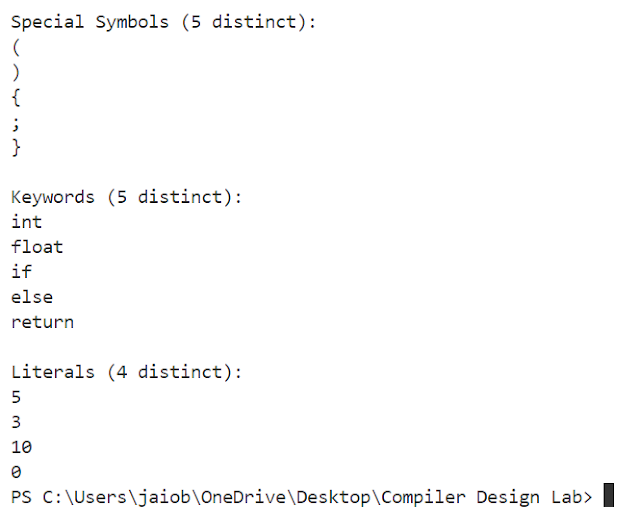

OUTPUT:

Date: 13/08/2024

EXPERIMENT NO. – 2

AIM: Write a LEX program to count the number of different types of tokens in a given program to simulate Tokenisation in Lexical phase of Compiler Design.

SOFTWARE USED: FLEX

THEORY:

LEX is a tool used to generate lexical analyzers that process text and classify tokens based on predefined patterns. It is integral to compilers and interpreters because it breaks input text down into manageable components for further analysis.
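When several patterns match at the same position, a LEX-generated scanner takes the longest match and, on a tie, the rule listed first. The sketch below imitates that selection in C++ with `std::regex`. It is a rough model of the behaviour and not how LEX is implemented; the `Rule` struct, `matchToken`, and `sampleRules` are hypothetical names introduced only for this illustration.

```cpp
#include <regex>
#include <string>
#include <utility>
#include <vector>

// Hypothetical rule: a category name plus the pattern that recognises it.
struct Rule {
    std::string name;
    std::regex pattern;
};

// Returns (category, lexeme) for the token at the start of `input`,
// or two empty strings when no rule matches there.
std::pair<std::string, std::string> matchToken(const std::string &input,
                                               const std::vector<Rule> &rules) {
    std::string bestName, bestLexeme;
    for (const Rule &rule : rules) {
        std::smatch m;
        // match_continuous forces the match to begin at the first character.
        if (std::regex_search(input, m, rule.pattern,
                              std::regex_constants::match_continuous) &&
            m.str(0).size() > bestLexeme.size()) {
            // Strictly longer wins, so an earlier rule keeps a tie.
            bestName = rule.name;
            bestLexeme = m.str(0);
        }
    }
    return {bestName, bestLexeme};
}

// Illustrative rule set; the keyword list is intentionally short.
std::vector<Rule> sampleRules() {
    return {
        {"keyword", std::regex("int|if|return")},
        {"identifier", std::regex("[A-Za-z_][A-Za-z0-9_]*")},
        {"literal", std::regex("[0-9]+")},
    };
}
```

With these sample rules, `matchToken("int x", sampleRules())` reports a keyword because both rules match three characters and the keyword rule comes first, while `matchToken("integer = 1", sampleRules())` reports the identifier `integer` because that match is longer.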

Structure of a LEX Program:

%{
/* Definitions Section: include headers and macros */
%}

%%

    /* Rules Section: define patterns and actions (comments here must be indented) */

%%

/* User Code Section: implement the main function and additional logic */

Workflow:

- Write a LEX File: Define patterns and actions in the ‘.l’ file.
- Generate C Source File: Run ‘flex filename.l’ to produce ‘lex.yy.c’.
- Compile the C File: Run ‘gcc lex.yy.c’ to create the executable (‘a.exe’ on Windows, ‘a.out’ on Linux or macOS).
- Run the Lexer: Execute ‘./a.exe’ to process input and produce token output.

CODE:

%{
#include <stdio.h>
#include <string.h>
#include <stdlib.h>

#define MAX_TOKENS 100

/* One list of distinct lexemes per token category. */
char *identifiers[MAX_TOKENS];
char *operators[MAX_TOKENS];
char *specialSymbols[MAX_TOKENS];
char *keywords[MAX_TOKENS];
char *literals[MAX_TOKENS];

int idCount = 0, opCount = 0, ssCount = 0, kwCount = 0, litCount = 0;

/* Returns 1 if token is already stored in list, 0 otherwise. */
int exists(char **list, int count, char *token) {
    for (int i = 0; i < count; i++) {
        if (strcmp(list[i], token) == 0) {
            return 1;
        }
    }
    return 0;
}

/* Stores a copy of token if it is new and the list still has room. */
void addToken(char **list, int *count, char *token) {
    if (*count < MAX_TOKENS && !exists(list, *count, token)) {
        list[*count] = strdup(token);
        (*count)++;
    }
}
%}

%option noyywrap

%%

"int"|"float"|"double"|"char"|"void"|"return"|"if"|"else"|"for"|"while"|"do"|"switch"|"case"|"default"|"break"|"continue"|"class"|"public"|"private"|"protected"|"static"|"const"|"typedef"|"namespace"|"using"|"template"|"try"|"catch"|"throw"|"virtual" { addToken(keywords, &kwCount, yytext); }

[0-9]+(\.[0-9]+)?|\"[^\"\n]*\" { /* numbers and double-quoted strings */ addToken(literals, &litCount, yytext); }

"+"|"-"|"*"|"/"|"%"|"++"|"--"|"=="|"!="|"<"|">"|"<="|">="|"&&"|"||"|"!"|"="|"+="|"-="|"*="|"/="|"%="|">>"|"<<"|"&"|"|"|"^"|"~" { addToken(operators, &opCount, yytext); }

"{"|"}"|"("|")"|";"|","|"["|"]"|"->"|"::" { addToken(specialSymbols, &ssCount, yytext); }

[a-zA-Z_][a-zA-Z0-9_]* { addToken(identifiers, &idCount, yytext); }

[ \t\n] { /* skip whitespace */ }

. { /* ignore any other character */ }

%%

int main() {
    printf("Enter the C++ code (end input by pressing Enter twice):\n");

    char input[1024];
    int emptyLineCount = 0;

    /* Scan the input line by line until two consecutive empty lines are read. */
    while (fgets(input, sizeof(input), stdin)) {
        if (strcmp(input, "\n") == 0) {
            emptyLineCount++;
            if (emptyLineCount == 2) {
                break;
            }
        } else {
            emptyLineCount = 0;
            YY_BUFFER_STATE buffer = yy_scan_string(input);
            yylex();
            yy_delete_buffer(buffer);
        }
    }

    printf("Identifiers (%d distinct):\n", idCount);
    for (int i = 0; i < idCount; i++) {
        printf("%s\n", identifiers[i]);
    }

    printf("\nOperators (%d distinct):\n", opCount);
    for (int i = 0; i < opCount; i++) {
        printf("%s\n", operators[i]);
    }

    printf("\nSpecial Symbols (%d distinct):\n", ssCount);
    for (int i = 0; i < ssCount; i++) {
        printf("%s\n", specialSymbols[i]);
    }

    printf("\nKeywords (%d distinct):\n", kwCount);
    for (int i = 0; i < kwCount; i++) {
        printf("%s\n", keywords[i]);
    }

    printf("\nLiterals (%d distinct):\n", litCount);
    for (int i = 0; i < litCount; i++) {
        printf("%s\n", literals[i]);
    }

    return 0;
}

OUTPUT:
